OpenAI has announced the release of its brand-new model, GPT-4o (the ‘o’ stands for ‘omni’). Beyond its many advanced and exciting features in computer vision, real-time translation, and education, one announcement will particularly delight free users: GPT-4o is available to them at no cost!

What does this mean? Thanks to multimodality, any free user can now create a website much more easily. The model can help you design, write, and launch a website with minimal effort, even if you have no coding experience.

Let’s transform some ideas from pen and paper into a live website using GPT-4o.

What is multimodality?

“Multimodality is the application of multiple literacies within one medium. Multiple literacies or ‘modes’ contribute to an audience’s understanding of a composition. Everything from the placement of images to the organization of the content to the method of delivery creates meaning.” (Wikipedia)

Because GPT-4o is multimodal, it can handle and generate content in various formats, such as text, images, and more. This can significantly enhance your website creation process, especially if you’re not a coder. Here’s how these capabilities can help you create a multimodal website.

Text

- You can provide text descriptions or instructions for what you want your website to be: for instance, the homepage layout, the content of each section, or the kind of blog posts you need.

- The generated content can be optimized for search engines, which can improve your site’s visibility and bring in more organic traffic.

- You can generate blog posts, product descriptions, and pages while ensuring your content is engaging and informative.

Images

- Upload images you want to include, and let GPT-4o analyze them and incorporate them into the site layout: your logo, product images, banners, etc.

- Use images as inspiration: upload examples of what you like to help the model generate media that fits your taste and needs.

- Thanks to its integration with tools like DALL-E, GPT-4o can also create custom images and illustrations.

Videos

- If you have welcome videos, tutorials, or product demos, you can upload them as video files or links to include on your website, or even have the model create some.

- It can also help you write scripts for videos, ensuring your content is well-structured and engaging.

Ready? Let’s start!

As you may have noticed in my other stories, I like using bakeries as examples. So let’s keep it going: I’m now a local baker, and I need a website. I already have some ideas about the layout: what I’d like, and where.

My free OpenAI account is created and ready, and I’m now facing my companion. Staring at the screen… What should I say? Ok, let’s try a simple question first.

Hello! Design a website for a local bakery

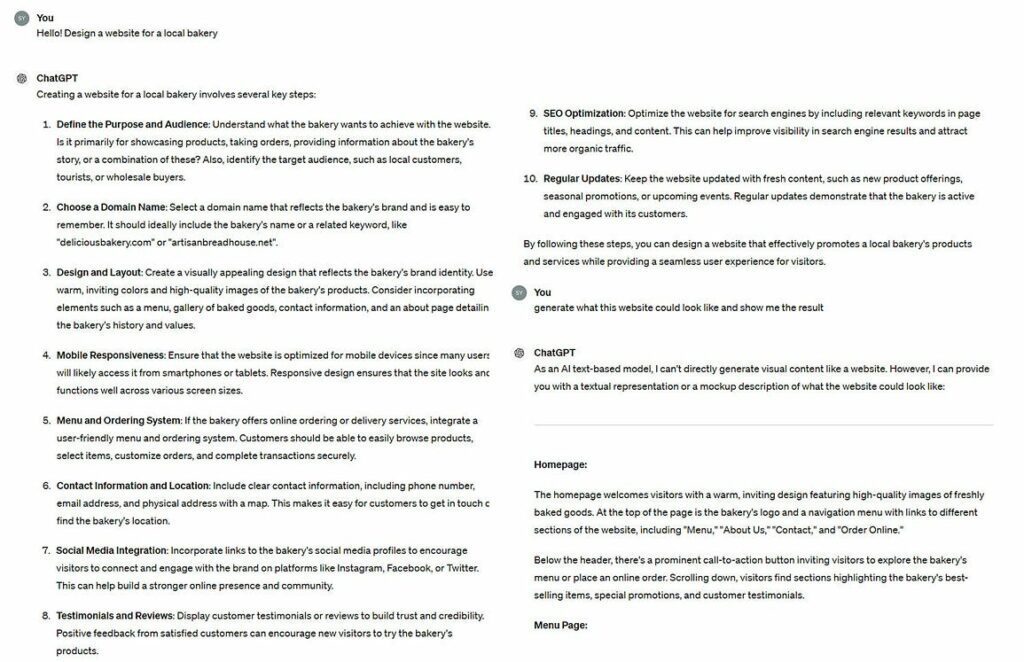

To better grasp the power of GPT-4o, let’s see what the free GPT-3.5 model would have done:

That’s not bad. It isn’t very detailed, but we still get the idea, and maybe even a few ideas we hadn’t thought of. But since GPT-3.5 is a text-only model, there is no way to get a glimpse of the possible result.

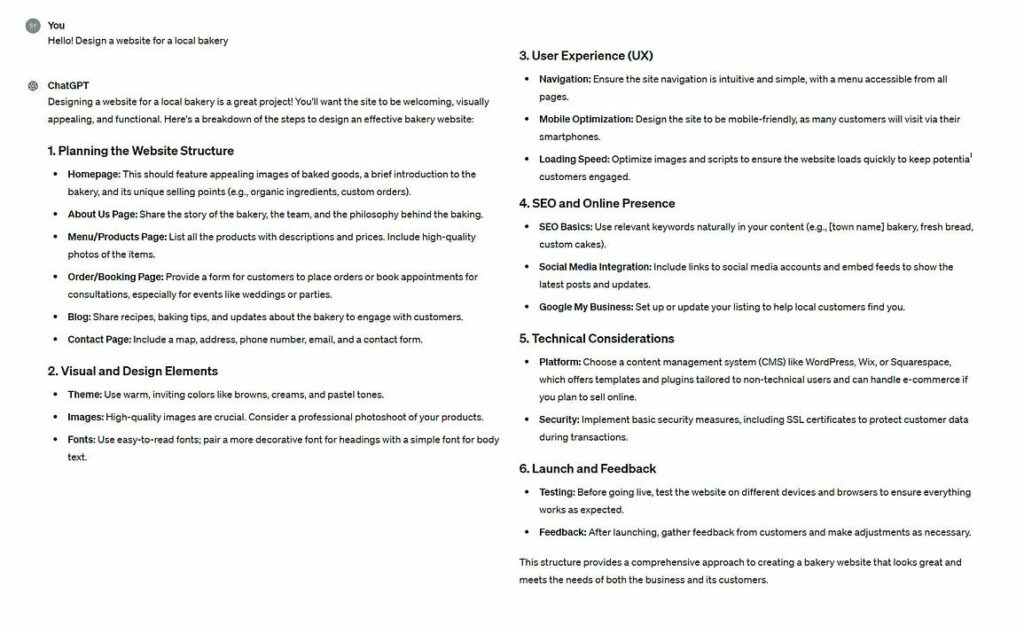

Until yesterday, you had to pay $20/month to access the more powerful model, GPT-4. Let’s give it the same task:

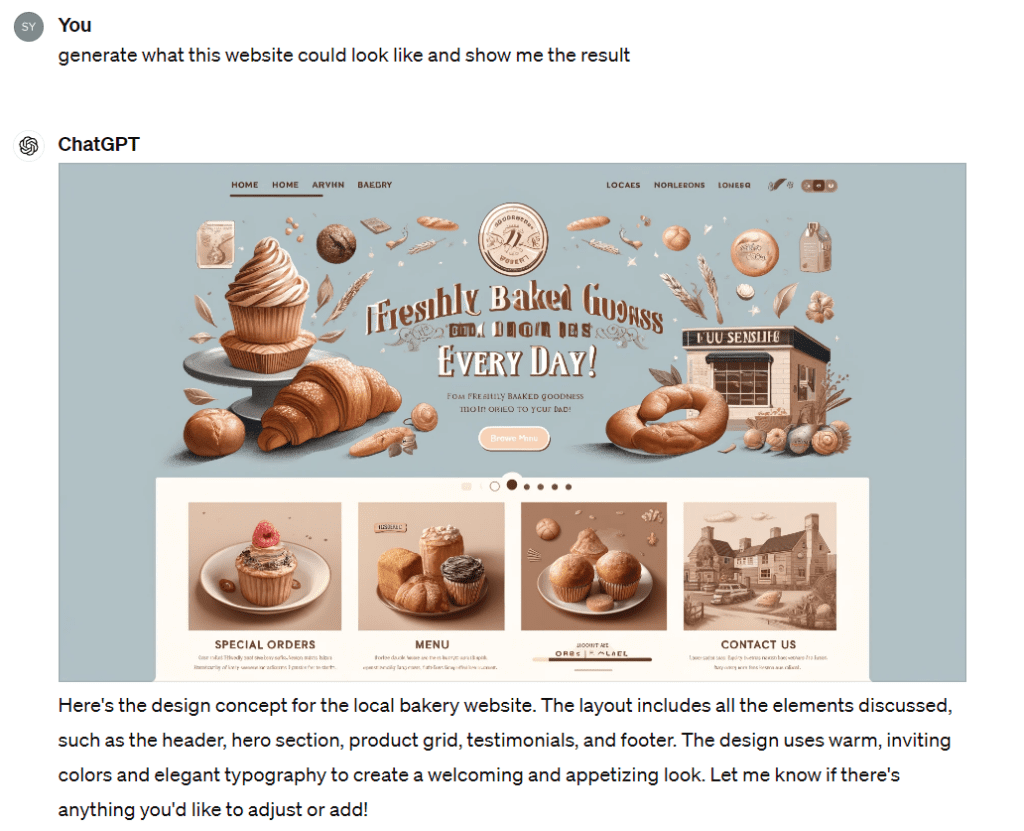

When asked to generate the potential result, here is what we get:

It took about a minute for the image generation alone.

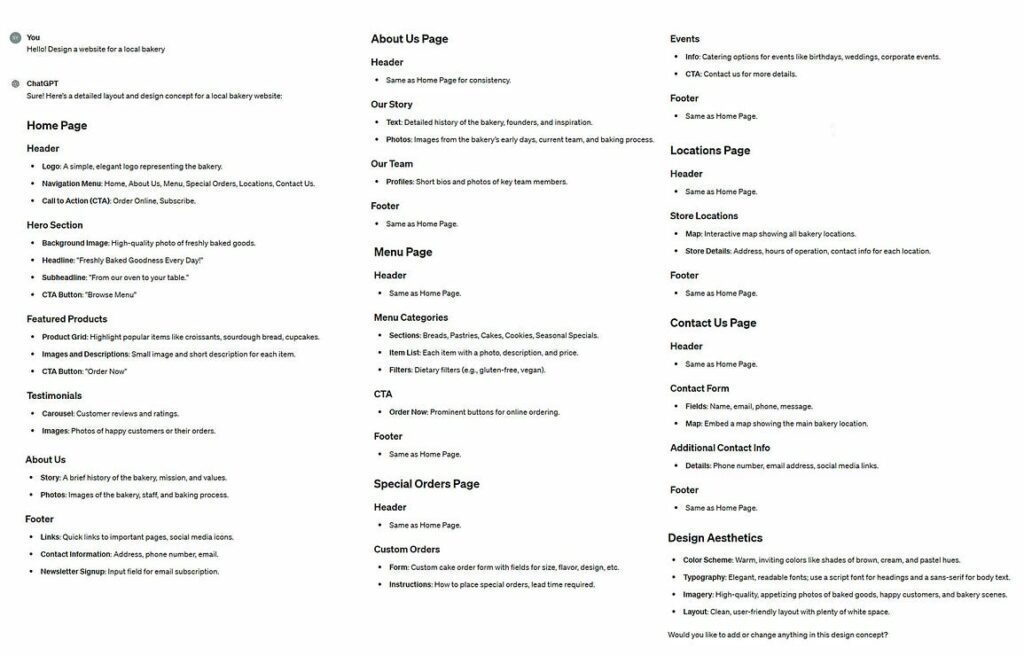

Now to the free GPT-4o:

As you can already see, the results are much more detailed. We get more sections, call-to-action buttons, hints about typography and the color scheme, and even a follow-up question (without adding any custom instructions, for the more advanced techies reading this post).

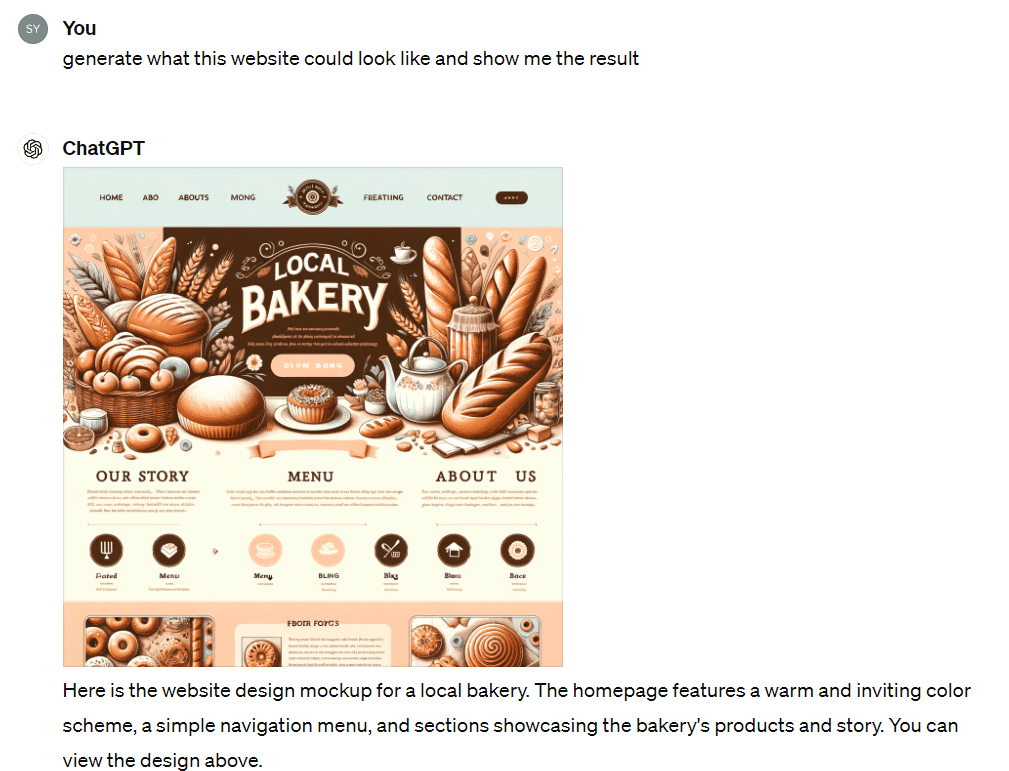

What about a preview of our future website? In less than 20 seconds, here is what I got:

Not that bad for a free model, right?

But you could argue that it doesn’t fit your taste or your choice of colors, that some sections aren’t necessary, or that you’d need more items in the menu. This is where multimodality comes in. First, let’s grab my pen and paper.

Adding a simple sketch

The thing is, I’m terrible at drawing, which, in this case, is perfect. Here is the sketch I came up with and uploaded before sending the next prompt:

When I first thought about the website, here is the simple design I came up with. Please adapt your proposal to the images & text layout, number and names of the sections I sketched in the uploaded image, and regenerate a proposal

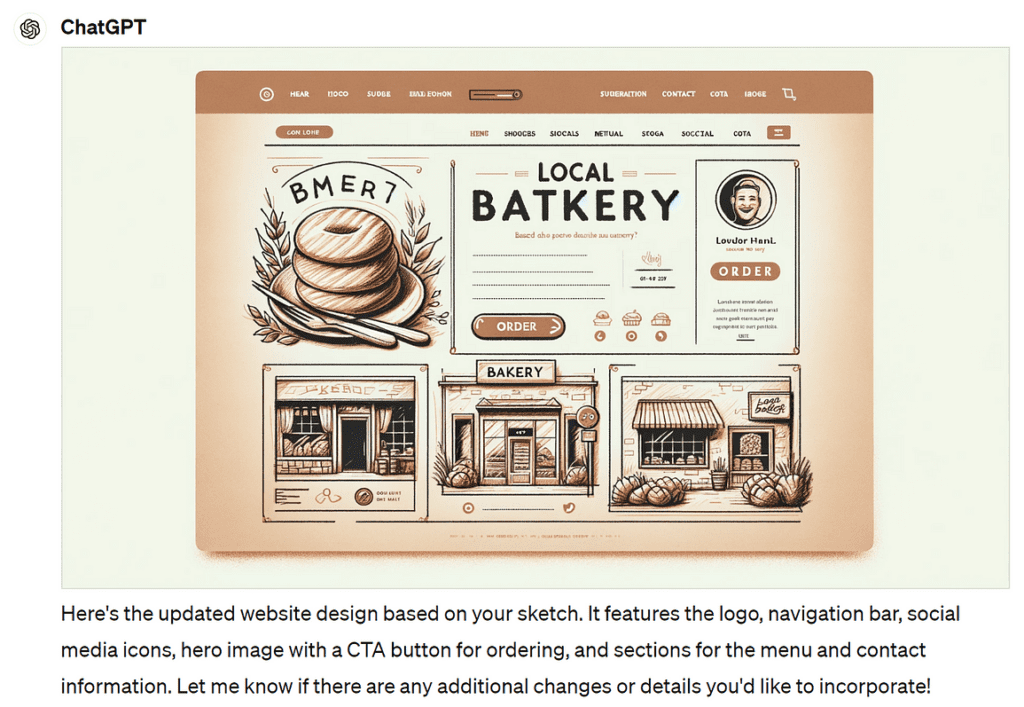

Here is the updated proposal:

Not exactly what I wanted, but it’s better. We’ll dive into the details later. Can the model already wrap it up?

Please write the code needed to build this website. Follow the up-to-date performance and accessibility best practices. When you’re done, please create a zip archive for me to upload it online.

The process took… 1 minute and 20 seconds.

In under a minute and a half, I could see the content and style files, the image paths, and the generated zip archive.
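If you’d rather package the files yourself (for example, after tweaking the generated HTML and CSS by hand), Python’s standard `zipfile` module is enough. This is a minimal sketch, assuming the static site sits in a local folder; the folder name `bakery-site` and the helper `zip_site` are hypothetical, and the archive should be written outside the site folder so it doesn’t end up zipping itself.

```python
import zipfile
from pathlib import Path


def zip_site(site_dir: str, archive_path: str) -> list[str]:
    """Pack every file under site_dir into archive_path, keeping relative paths.

    Returns the archive member names, in the order they were written.
    """
    root = Path(site_dir)
    written = []
    with zipfile.ZipFile(archive_path, "w", compression=zipfile.ZIP_DEFLATED) as zf:
        for path in sorted(root.rglob("*")):
            if path.is_file():
                # Store "css/style.css" rather than "bakery-site/css/style.css",
                # so index.html ends up at the root of the archive.
                arcname = path.relative_to(root).as_posix()
                zf.write(path, arcname)
                written.append(arcname)
    return written


if __name__ == "__main__":
    # Hypothetical folder holding the generated static files.
    members = zip_site("bakery-site", "bakery-site.zip")
    print(f"Archived {len(members)} files")
```

Storing paths relative to the site folder keeps `index.html` at the top level of the archive, which is what hosts accepting zip uploads commonly expect; it is still worth checking your host’s documentation.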

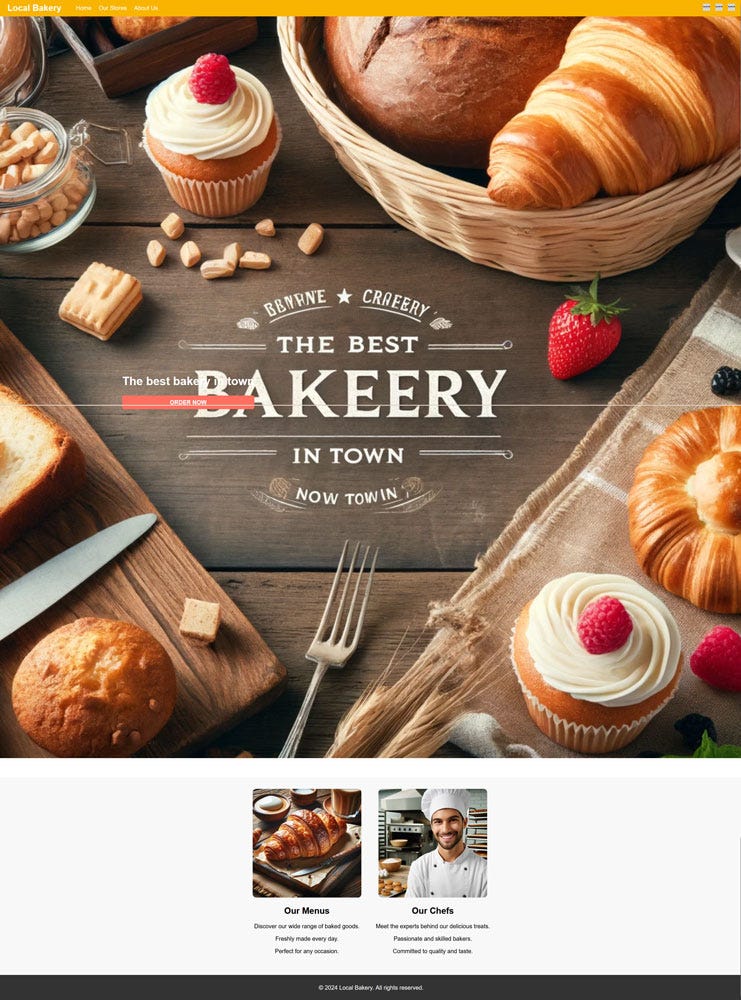

Now, let’s analyze. I have my “hero image” (for non-technical readers, the “hero image” is the main, largest image on the front page, usually carrying the most important information and action buttons) and my call-to-action button labeled “Order”.

We went from a five-page slider area in the first proposal to a simple area with three sections. I asked for two, though, and the name of each section is missing.

Similarly, the navigation bar instructions were not interpreted correctly. “Social”, in my sketch (and in my mind), was meant to hold the social media icons. There are also more menu items than needed.

I could (and should) use prompt engineering here to give the model more context and instructions, express my ideas better, and be more precise than I’ve been. But the goal here is to test the model’s ability to handle simple prompts, supported by uploaded documents.

Adding a text file

I’ll add a little more guidance:

Thank you. The uploaded document contains more layout instructions and the text I need for the homepage. Adapt your generated image proposal based on that.

You can download the text file here, or get all the resources at the bottom of the article.

That’s way better. Not there yet, but better. Everything I asked for is there.

Sure, the call to action is not centered, the contrast between the image and the text is far from usable, and the header image sits above the fold line. But the model seems to follow the instructions.

Can I ask for more?

Using a color scheme

Here are some updated text instructions. They include a color scheme, some new sections, and several specifications about the existing ones. Please update your code and the generated images accordingly, and send me a new zip file

I now tell the model which colors I want to use and where, add a few details about the header image, and ask for a footer.

- Base color (#FFFDD0): for the background

- Secondary color (#C5A880) and accent color (#7C4D3A): for typography and structural components

- Highlight color (#FADADD): for interactive elements (buttons and links)

- Neutral color (#F5F5DC): for section backgrounds
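Since contrast was one of the weak points of the previous iteration, and the first code prompt asked for accessibility best practices, it can help to check the palette yourself using the WCAG 2.x contrast-ratio formula. This is a minimal sketch: the hex values come from the text file above, while the helper names and the choice of pairs to test (the two text colors against the two background colors) are my own assumptions.

```python
def _linearize(channel_8bit: int) -> float:
    """Convert one 0-255 sRGB channel to linear light (WCAG 2.x formula)."""
    c = channel_8bit / 255
    return c / 12.92 if c <= 0.03928 else ((c + 0.055) / 1.055) ** 2.4


def relative_luminance(hex_color: str) -> float:
    """Relative luminance of a #RRGGBB color, as defined by WCAG 2.x."""
    h = hex_color.lstrip("#")
    r, g, b = (int(h[i:i + 2], 16) for i in (0, 2, 4))
    return 0.2126 * _linearize(r) + 0.7152 * _linearize(g) + 0.0722 * _linearize(b)


def contrast_ratio(fg: str, bg: str) -> float:
    """WCAG contrast ratio between two colors, from 1:1 up to 21:1."""
    lighter, darker = sorted(
        (relative_luminance(fg), relative_luminance(bg)), reverse=True
    )
    return (lighter + 0.05) / (darker + 0.05)


# Palette from the text file uploaded to GPT-4o.
PALETTE = {
    "base": "#FFFDD0",
    "secondary": "#C5A880",
    "accent": "#7C4D3A",
    "highlight": "#FADADD",
    "neutral": "#F5F5DC",
}

AA_NORMAL_TEXT = 4.5  # WCAG 2.x AA minimum for regular-size text

if __name__ == "__main__":
    # Text colors (secondary, accent) checked against the two background colors.
    for text in ("secondary", "accent"):
        for background in ("base", "neutral"):
            ratio = contrast_ratio(PALETTE[text], PALETTE[background])
            verdict = "passes" if ratio >= AA_NORMAL_TEXT else "fails"
            print(f"{text} on {background}: {ratio:.2f}:1 ({verdict} AA)")
```

By this formula, the accent color on the base background comes out at roughly 6.8:1, comfortably above the AA threshold, while the secondary color on the same background lands near 2.2:1. That suggests keeping the secondary color for structural components rather than body text.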

You can download the updated text here.

Once again, I got all the updated files, the generated images (plus the prompts used with DALL-E, so I can reuse them if needed), the folder structure… And it all took less than 3 minutes.

Going further

We’ve only seen the tip of the model’s potential, and we could generate many more things:

- Videos: the whole process took less than 2 minutes for a 10-second video, in which GPT-4o created the storyboard, generated the images, and tried to combine them into the final result. Unfortunately, it ran into “persistent path problems” and could not render the video, but it gave me the exact steps to do it myself. Here’s the final result, following its guidance.

- Music: automatically add an audio track file to the generated video

- Interactive quizzes or forms: provide a few questions and answers, and explain your logic

- Chatbot integration

- etc.

And why not, later on, ask the model to analyze user data and generate tailored recommendations?

Conclusion

With more than 20 years of experience in development, I know (and so do my colleagues: accessibility specialists, art director, UX/UI designer, and full-stack developers) that there would be A LOT more to do to match the level of an experienced team.

That being said, I have to admit that this model, free and given very little technical information, could help some professionals build a simple website that fits their taste, sketches, ideas, and iterations, while guiding them on how to put it online.

Can a minimalist yet effective single page be built this way? Definitely.

Even for this use case, would it need more work than the 10 to 15 minutes I spent here, starting from my sketch? Yes, of course.

Am I worried about my work, or my colleagues’? No. At least not yet. There is so much more to a website than its appearance: personas, product, quality, performance, responsive design, stack choice, traction, SEO, security, DPO, analysis…

However, GPT-4o can help people better understand these fields of expertise and guide them in the right direction, while improving the overall quality of web content and empowering more people to build an online presence without extensive technical knowledge.

In the end, am I impressed by the (r)evolution GPT-4o offers for free? To be honest, yes. And I may be even more impressed in the future. We’ve only scratched the surface, but stay tuned, there is more to come 🙂

You can download the generated zip file here. It contains the website static files, the “images” folder, the text files uploaded to guide the model, an image_prompts.txt file where I pasted all the prompts GPT-4o used with DALL-E, and the “video” folder, with the images generated for the video.
